Visual Ranker

The template provides the workflow to rank the quality of the text-to-image models responses, like Dall-E, Midjourney, Stable Diffusion etc.

Using this template gives the ability to compare the quality of the responses from different generative AI models, and rank the dynamic set of items with handy drag-and-drop interface.

This is helpful for the following use cases:

- Categorize the responses by different types: relevant, irrelevant, biased, offensive, etc.

- Compare and rank the quality of the responses from different models.

- Evaluate results of semantic search

- Personalisation and recommendation systems, marketplace product search

How to create the dataset

Collect a prompt and a list of images you want to display in each task in the following form:

[{

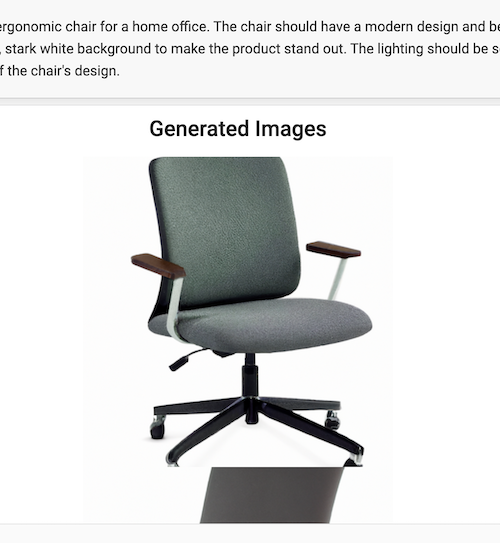

"prompt": "Generate a high-quality image of a stylish, ergonomic chair for a home office. ",

"images": [

{

"id": "chair_1",

"html": "<img src='/static/samples/chairs/chair1.png'/>"

},

{

"id": "chair_2",

"html": "<img src='/static/samples/chairs/chair2.png'/>"

},

{

"id": "chair_3",

"html": "<img src='/static/samples/chairs/chair3.png'/>"

},

{

"id": "chair_4",

"html": "<img src='/static/samples/chairs/chair4.png'/>"

}

]

}, ...]Each each contain "html" field where you can specify the path to the image you want to display.

This is a generic HTML renderer, so you can use any HTML tags here.

Collect dataset examples and store them in dataset.json file.

Starting your labeling project

Need a hand getting started with Label Studio? Check out our Zero to One Tutorial.

- Create a new project in Label Studio

- Go to

Settings > Labeling Interface > Browse Templates > Generative AI > LLM Ranker - Save the project

Alternatively, you can create project by using python SDK:

import label_studio_sdk

ls = label_studio_sdk.Client('YOUR_LABEL_STUDIO_URL', 'YOUR_API_KEY')

project = ls.create_project(title='Visual Ranker', label_config='<View>...</View>')Import the dataset

To import dataset, in the project settings go to Import and upload the dataset file dataset.json.

Using python SDK you can import the dataset with input prompts into Label Studio. With the PROJECT_ID of the project

you’ve just created, run the following code:

from label_studio_sdk import Client

ls = Client(url='<YOUR-LABEL-STUDIO-URL>', api_key='<YOUR-API_KEY>')

project = ls.get_project(id=PROJECT_ID)

project.import_tasks('dataset.json')Setup the labeling interface

Use the following configuration for the labeling interface:

<View>

<Style>

<!-- Customize your CSS styles here -->

</Style>

<View className="product-panel">

<Text name="prompt" value="$prompt"/>

</View>

<View>

<List name="generated_images" value="$images" title="Generated Images" />

<Ranker name="rank" toName="generated_images">

</Ranker>

</View>

</View>It includes the following elements:

<Text>that defines the prompt for the task<List>that displays the list of generated images to assess<Ranker>that adds functionality to rerank the images

Export the dataset

Labeling results can be exported in JSON format. To export the dataset, go to Export in the project settings and download the file.

Using the Python SDK you can export the dataset with annotations from Label Studio.

annotations = project.export_tasks(format='JSON')The output of annotations in "value" is expected to contain the following structure:

"value": {

"ranker": {

"rank": [

"chair_2",

"chair_4",

"chair_3",

"chair_1"

]

}

}The items in the list are “id” of the images, sorted in the ranked order