Model provider API keys for organizations

To use certain AI features across your organization, you must first set up a model provider. You can set up model providers from Organization > Settings.

For example, if you want to interact with an LLM when using the <Chat> tag, you will first need to configure access to the model.

Note: These models are not used with the Label Studio AI Assistant.

About adding model providers

User access

Once added, a model provider is available to anyone in your organization who uses an AI-powered workflow.

However, only users with access to the organization settings (Owners and Admins) can add and configure models.

Whitelisting network access

If you are restricting network access to your resource, you may need to whitelist HumanSignal IP addresses (IP ranges on SaaS) when configuring network security.

Approaches

There are two approaches to adding a model provider API key.

In the first scenario, you add one provider connection per organization, which provides access to a set of whitelisted models. Examples include:

- OpenAI

- Vertex AI

- Gemini

- Anthropic

In the second scenario, you add a separate API key per model. Examples include:

- Azure OpenAI

- Azure AI Foundry

- Custom

Supported base models

| Provider | Supported models |
|---|---|
| OpenAI | gpt-5-2, gpt-5.1, gpt-5, gpt-5-mini, gpt-5-nano |
| Gemini | gemini-2.5-pro, gemini-2.5-flash, gemini-2.5-flash-lite, gemini-2.0-flash, gemini-2.0-flash-lite |
| Vertex AI | gemini-2.5-pro, gemini-2.5-flash, gemini-2.5-flash-lite, gemini-2.0-flash, gemini-2.0-flash-lite |
| Anthropic | claude-3-5-haiku-latest, claude-3-5-sonnet-latest, claude-3-7-sonnet-latest |
| Azure OpenAI | Azure OpenAI chat-based models. Note: We recommend against using GPT-3.5 models, as they can be prone to rate limit errors and are not compatible with image data. |
| Azure AI Foundry | All Azure AI Foundry models are supported. |
| Custom | Custom LLM |

Add a model provider

Note: If you have already configured model providers to use with Prompts, they are automatically added to your organization-level providers.

OpenAI

You can only have one OpenAI key per organization. This grants you access to a set of whitelisted models. For a list of these models, see Supported base models.

If you don’t already have one, you can create an OpenAI account here.

You can find your OpenAI API key on the API key page.

Gemini

You can only have one Gemini key per organization. This grants you access to a set of whitelisted models. For a list of these models, see Supported base models.

For information on getting a Gemini API key, see Get a Gemini API key.

Vertex AI

You can only have one Vertex AI key per organization. This grants you access to a set of whitelisted models. For a list of these models, see Supported base models.

Follow the instructions here to generate a credentials file in JSON format: Authenticate to Vertex AI Agent Builder - Client libraries or third-party tools

The JSON credentials are required. You can also optionally provide the project ID and location associated with your Google Cloud Platform environment.
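To illustrate how the optional project ID can relate to the credentials file, here is a minimal Python sketch that parses a Google Cloud credentials JSON and, when no project ID is supplied, falls back to the `project_id` field that service account key files contain. The function name `resolve_vertex_settings` and this fallback behavior are assumptions for illustration only; Label Studio's own handling may differ.

```python
import json
from typing import Optional


def resolve_vertex_settings(credentials_json: str,
                            project_id: Optional[str] = None,
                            location: Optional[str] = None) -> dict:
    """Hypothetical helper, not Label Studio's code.

    Parses a Google Cloud credentials file and, if no project ID is given,
    falls back to the "project_id" field found in service account key files.
    """
    creds = json.loads(credentials_json)
    if "type" not in creds:
        raise ValueError("not a Google Cloud credentials file: missing 'type'")
    resolved_project = project_id or creds.get("project_id")
    if not resolved_project:
        # For example, user credentials typically do not carry a project ID.
        raise ValueError("no project ID given and none found in the credentials")
    return {
        "credential_type": creds["type"],  # e.g. "service_account"
        "project_id": resolved_project,
        "location": location,  # passed through unchanged; no default is assumed
    }
```

An explicitly provided project ID always takes precedence over the one stored in the file, which mirrors treating the project ID field as an optional override.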

Anthropic

You can only have one Anthropic key per organization. This grants you access to a set of whitelisted models. For a list of these models, see Supported base models.

For information on getting an Anthropic API key, see Anthropic - Accessing the API.

Azure OpenAI

Each Azure OpenAI key is tied to a specific deployment, and each deployment comprises a single OpenAI model. If you want to use multiple models through Azure, you need to create a deployment for each model and then add each key to Label Studio.

For a list of the Azure OpenAI models we support, see Supported base models.

To use Azure OpenAI, you must first create the Azure OpenAI resource and then a model deployment:

- From the Azure portal, create an Azure OpenAI resource.
- From Azure OpenAI Studio, create a deployment. This is a base model endpoint.

When adding the key to Label Studio, you are asked for the following information:

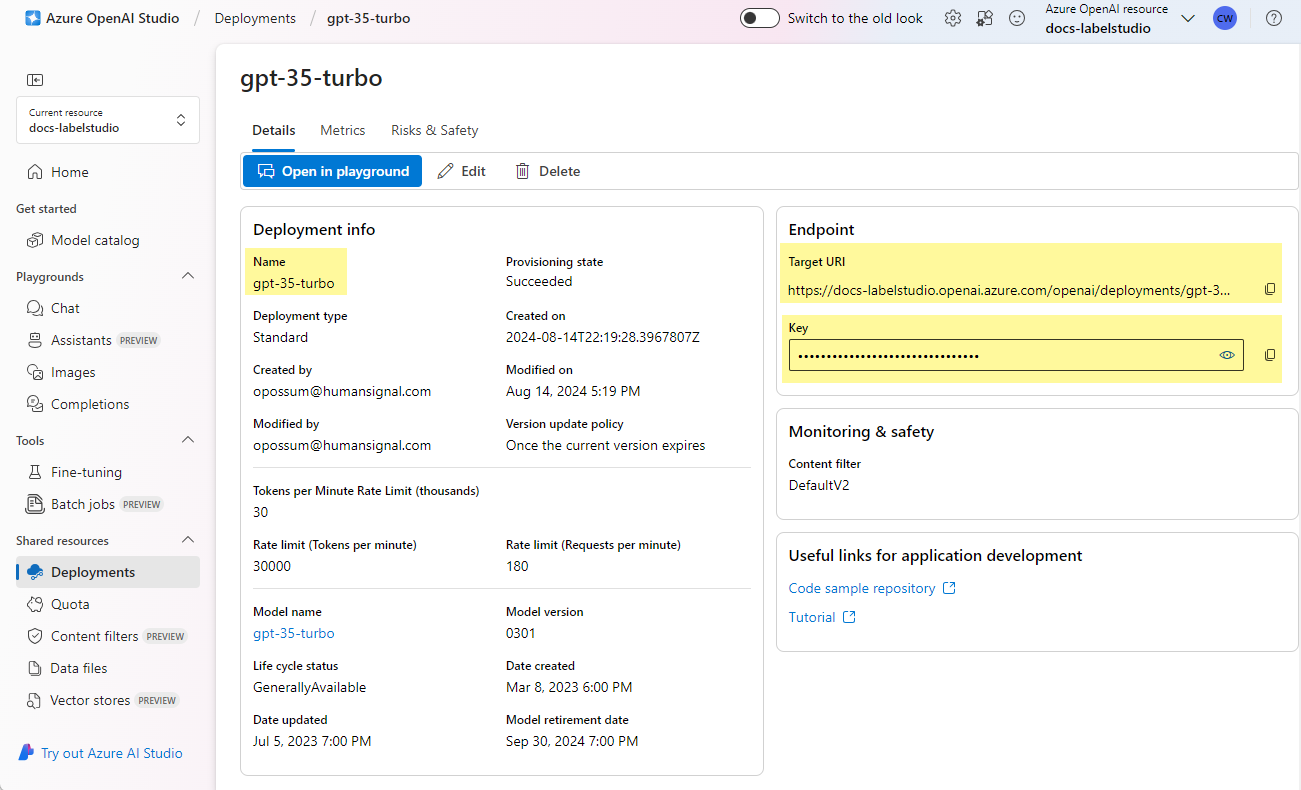

| Field | Description |
|---|---|
| Deployment | The name of the deployment. By default, this is the same as the model name, but you can customize it when you create the deployment. If the two differ, you must use the deployment name, not the underlying model name. |
| Endpoint | The target URI provided by Azure. |
| API key | The key provided by Azure. |

You can find all this information in the Details section of the deployment in Azure OpenAI Studio.
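To show why the deployment name (not the model name) matters, here is a minimal Python sketch of how an Azure OpenAI chat completions request is typically addressed: the deployment name is part of the URL path, and the key is sent in an `api-key` header. The `api-version` default, the example endpoint, and the helper name `build_azure_chat_request` are assumptions; use the API version your resource supports. The sketch only builds the request and does not send it.

```python
import json
import urllib.request
from urllib.parse import quote


def build_azure_chat_request(endpoint: str, deployment: str, api_key: str,
                             api_version: str = "2024-02-01") -> urllib.request.Request:
    # The deployment name goes into the path. If you renamed the deployment,
    # this must be that custom name rather than the underlying model name.
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{quote(deployment)}"
           f"/chat/completions?api-version={api_version}")
    body = {"messages": [{"role": "user", "content": "Hello"}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values for illustration only.
req = build_azure_chat_request("https://my-resource.openai.azure.com/", "my-gpt-deployment", "<api-key>")
print(req.full_url)
```

Because the model is selected by the deployment path rather than by a `model` field in the body, each deployment, and therefore each model, needs its own entry in Label Studio.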

Azure AI Foundry

Use the Azure AI Foundry model catalog to deploy a model: AI Foundry docs.

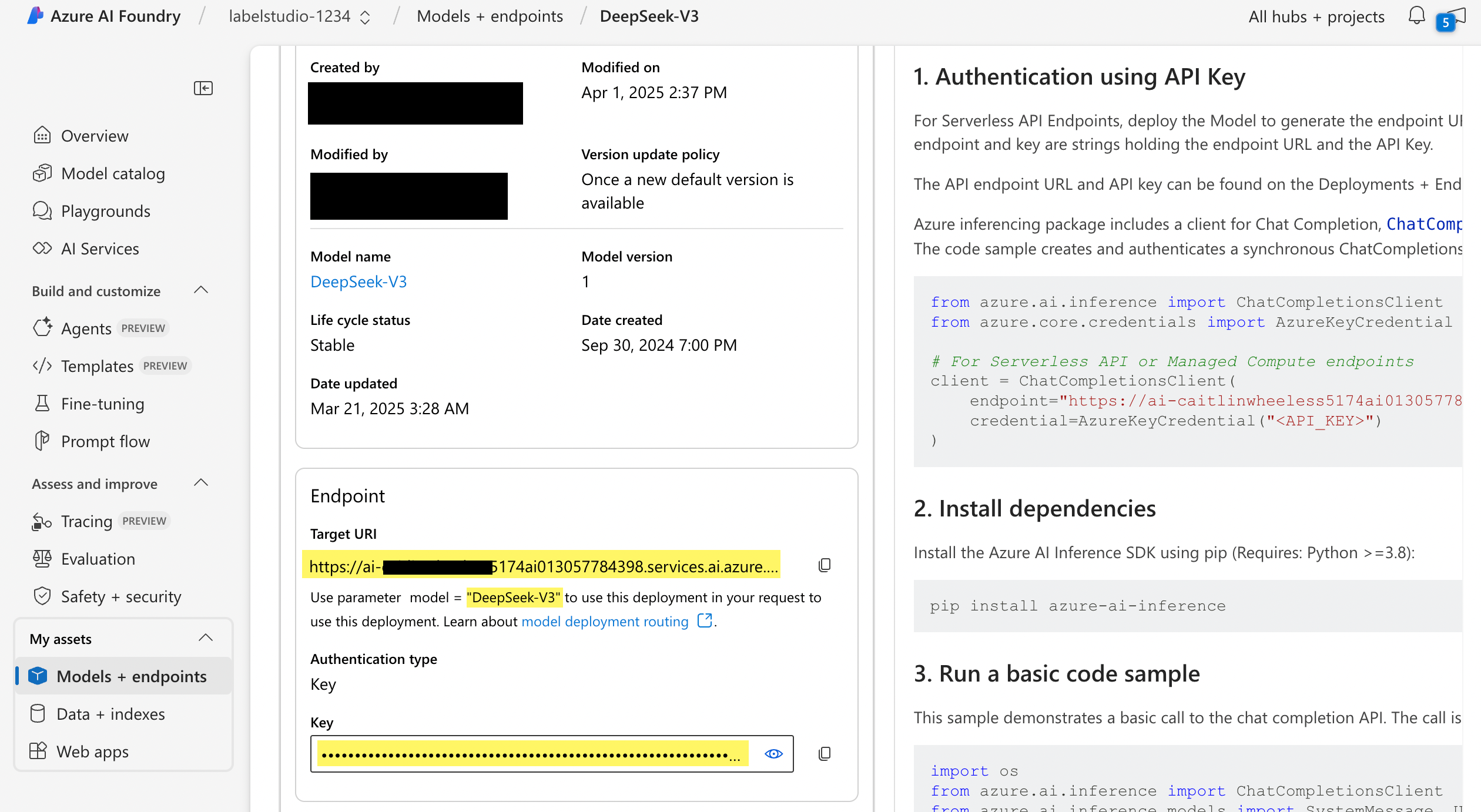

Once the model is deployed, navigate to its Details page. The information you need to set up the connection to Label Studio is under Endpoint.

When adding the key to Label Studio, you are asked for the following information:

| Field | Description |
|---|---|
| Model | The model name. This is provided as a parameter with your endpoint information (see the screenshot above). |
| Endpoint | The Target URI provided by AI Foundry. |
| API key | The Key provided by AI Foundry. |

Custom LLM

You can use your own self-hosted or fine-tuned model as long as it meets the following criteria:

- Your server must provide JSON mode for the LLM. Specifically, the API must accept `response_format` with `type: json_object` and `schema` with a valid JSON schema: `{"response_format": {"type": "json_object", "schema": <schema>}}`
- The server API must follow the OpenAI format.
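For reference, here is a minimal Python sketch of a request body in the shape described above, plus parsing of a reply in the standard OpenAI chat completions format (`choices[0].message.content`). The model name, prompt, schema, and helper names are hypothetical placeholders; whether the `schema` field is actually enforced depends on your server.

```python
import json


def build_json_mode_payload(model: str, prompt: str, schema: dict) -> dict:
    # OpenAI-format chat request with JSON mode enabled and a JSON schema attached.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "response_format": {"type": "json_object", "schema": schema},
    }


def parse_json_reply(response: dict) -> dict:
    # In the OpenAI format, the generated text is in choices[0].message.content.
    content = response["choices"][0]["message"]["content"]
    return json.loads(content)  # raises ValueError if the reply is not valid JSON


# Hypothetical schema for a single sentiment label.
schema = {
    "type": "object",
    "properties": {"sentiment": {"type": "string"}},
    "required": ["sentiment"],
}
payload = build_json_mode_payload("llama3.2", "Classify: 'Great product!'", schema)
print(json.dumps(payload, indent=2))
```

If a server ignores `response_format`, the reply may not be valid JSON, which is why parsing it strictly is a quick way to test compatibility.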

Examples of compatible LLMs include Ollama and sglang.

To add a custom model, enter the following:

- A name for the model.
- The endpoint URL for the model. For example, `https://my.openai.endpoint.com/v1`
- (Optional) An API key to access the model. An API key is tied to a specific account, but once added, access is shared within the organization.
- (Optional) An auth token to access the model API. An auth token provides API access at the server level.
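As a rough sketch of how these fields line up with an OpenAI-format API, the following Python code builds a chat completions request from a name, an endpoint, and an optional API key. It assumes the API key is sent as a `Bearer` token in the `Authorization` header, as in the OpenAI API. How Label Studio passes the separate auth token is not described here, so the sketch leaves it out. `CustomModelConnection` is a hypothetical name.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomModelConnection:
    """Hypothetical container for the fields entered for a custom model."""
    name: str                      # model name as the server knows it
    endpoint: str                  # base URL, e.g. https://my.openai.endpoint.com/v1
    api_key: Optional[str] = None  # optional

    def chat_request(self, prompt: str) -> urllib.request.Request:
        # OpenAI-format servers expose chat at <base URL>/chat/completions.
        url = f"{self.endpoint.rstrip('/')}/chat/completions"
        headers = {"Content-Type": "application/json"}
        if self.api_key:
            # Assumption: the key is passed as a Bearer token, as in the OpenAI API.
            headers["Authorization"] = f"Bearer {self.api_key}"
        body = {"model": self.name, "messages": [{"role": "user", "content": prompt}]}
        return urllib.request.Request(
            url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
        )


conn = CustomModelConnection("llama3.2", "https://my.openai.endpoint.com/v1", "ollama")
req = conn.chat_request("Hello")
print(req.full_url)
```

The name is sent as the `model` field, which is why it has to match the model identifier your server expects.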

Example with Ollama

- Set up Ollama, e.g. `ollama run llama3.2`
- Verify that your local OpenAI-compatible API is working, e.g. `http://localhost:11434/v1`
- Create an externally facing endpoint, e.g. `https://my.openai.endpoint.com/v1` -> `http://localhost:11434/v1`
- Add the connection to Label Studio:
  - Name: `llama3.2` (must match the model name in Ollama)
  - Endpoint: `https://my.openai.endpoint.com/v1` (note that the `v1` suffix is required)
  - API key: `ollama` (default)
  - Auth token: empty
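Before adding the connection, it can help to confirm that the endpoint responds and that the model name matches. A minimal Python sketch, assuming the server exposes the OpenAI-style `GET /v1/models` route (Ollama's OpenAI-compatible API provides one): it lists the model IDs and checks for the name you plan to enter. The helper names are hypothetical.

```python
import json
import urllib.request


def list_model_ids(base_url: str, api_key: str = "ollama") -> list:
    # OpenAI-style servers list available models at <base URL>/models.
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        payload = json.load(resp)
    return [m["id"] for m in payload.get("data", [])]


def has_model(model_ids: list, name: str) -> bool:
    # Ollama reports tagged IDs such as "llama3.2:latest"; an untagged name
    # refers to the ":latest" tag, so accept either form.
    return name in model_ids or f"{name}:latest" in model_ids


# Usage (requires a running server):
# has_model(list_model_ids("http://localhost:11434/v1"), "llama3.2")
```

Running the same check against the externally facing URL also confirms that the forwarding to `http://localhost:11434/v1` works.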

Example with Hugging Face Inference Endpoints

- Use a DeepSeek model.
- In API Keys, add a connection to the Custom provider:
  - Name: `deepseek-ai/DeepSeek-R1`
  - Endpoint: `https://router.huggingface.co/together/v1`
  - API key: `<your-hf-api-key>`
  - Auth token: empty